In this article I will outline an idea I have had for an infinitely scalable, Turing complete, layer 2 scaling concept for Bitcoin. I’ll call it Buzz.

Buzz is influenced by a number of ideas, in particular Ethereum’s VM, sharding, tree chains, weak blocks and merge mining. I’ll be discussing Bitcoin, but the Buzz scaling concept could be implemented on any PoW blockchain, and without Turing completeness.

How does it work?

Buzz is merge mined with Bitcoin and has its own blockchain called Angel, which serves as a gateway (through a two-way peg) between the two systems. In addition to merge mined blocks, Angel has a second (lower) difficulty which enables more frequent block creation (weak blocks), say every 30 seconds.

Buzz lifts its Turing completeness from Ethereum, taking much of its development but with a few adaptations to enable infinite scaling.

Ethereum’s current plan for scaling is proof of stake with 80 separate shards. All shards communicate through a single master shard. Each shard has up to 120 validators, who are randomly allocated to it and vote with their stake to reach consensus on block creation.

Buzz is quite radically different in its approach. It depends upon PoW, which is elegant, resilient and has no cap on the number of consensus participants or minimum stake requirements. It avoids splitting the network into an arbitrary number of shards with an arbitrary number of validators, where every shard is homogeneous and cannot vary properties such as block creation time to suit different use cases.

In Buzz, each shard is called a wing and has its own blockchain. There is no cap on the number of wings, and anybody can create one. Rather than these design decisions being fixed up front, the free market decides whether a wing will succeed or not. If there’s a wing with high transaction volume and high transaction fees, it will attract a higher number of miners and a higher level of security.

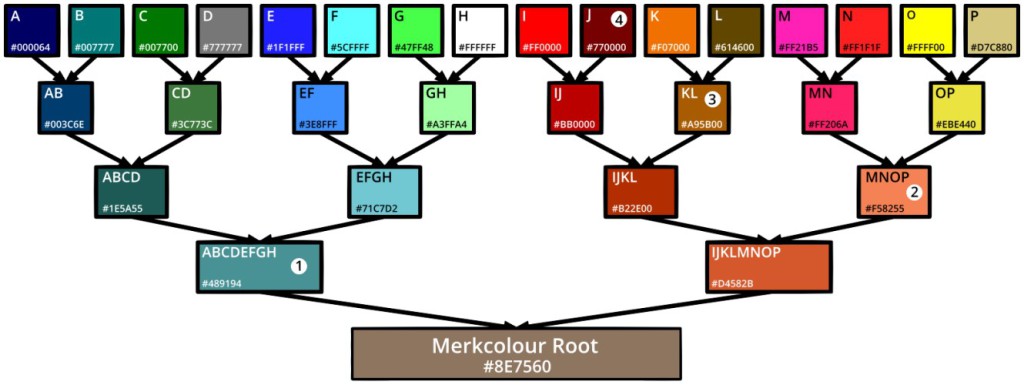

Buzz is basically a blockchain tree, since each wing can have multiple child wings, and the Angel sits at the top of the tree overseeing everything and communicating with the ‘other world’ that is the main Bitcoin blockchain.

The higher up the tree you go, the higher the hashrate will be, since the hashrate of a wing is equal to the hashing power of miners on that wing itself combined with the hashing power on all of its descendant wings. Data and coins can be transferred up and down the tree between parent and child wings. How frequently this can occur depends on the difference in hashrate between the child and parent. Nodes can operate and mine on any wing, but are required to also maintain the blockchains of every parent wing, up to and including the Angel.
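
The hashrate rule above can be sketched as a small recursive sum. This is only an illustration: the `Wing` class, its field names and the hashrate figures are made up for the example and are not part of any Buzz specification.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Wing:
    """A wing in the Buzz tree (hypothetical structure for illustration)."""
    address: str
    own_hashrate: float  # hash power of miners hashing at exactly this wingspace
    children: List["Wing"] = field(default_factory=list)


def effective_hashrate(wing: Wing) -> float:
    """Hashrate securing a wing: its own miners plus every descendant wing."""
    return wing.own_hashrate + sum(effective_hashrate(c) for c in wing.children)


# Made-up numbers, in arbitrary units.
leaf = Wing("a9e:33:1a", 5.0)
mid = Wing("a9e:33", 10.0, [leaf])
top = Wing("a9e", 20.0, [mid, Wing("a9e:7f", 2.0)])

print(effective_hashrate(leaf))  # 5.0
print(effective_hashrate(mid))   # 15.0
print(effective_hashrate(top))   # 37.0
```

The Angel would simply be the root of this tree, so its effective hashrate is the sum over every wing.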

The process of creating a wing is as trivial as getting a create wing transaction included in an Angel block. As well as being a gateway, Angel also serves as a registry, a little like DNS, keeping track of the properties of all wings.

If you want to create a wing with a block size of 1000MB and creation time of 1 second, that’s no problem. The market will probably decide your blockchain isn’t viable and you’ll be the only node on it.

Each wing will have its own difficulty level. If it was created with a 5 minute block target, its difficulty will be determined through the same process existing blockchains use.
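
As one example of "the same process existing blockchains use", here is a rough sketch of a Bitcoin-style retarget applied to a wing. The 2016 block window and the factor of 4 clamp are borrowed from Bitcoin and are assumptions here; a wing could register different readjustment rules. The function name and parameters are hypothetical.

```python
def retarget(old_target: int, actual_seconds: int, block_seconds: int,
             window_blocks: int = 2016, max_step: int = 4) -> int:
    """Scale the target by how long the last window took versus how long it should have.

    A larger target means lower difficulty. The adjustment is clamped so a
    single retarget can change the target by at most a factor of max_step.
    """
    expected = block_seconds * window_blocks
    clamped = min(max(actual_seconds, expected // max_step), expected * max_step)
    return old_target * clamped // expected


five_minutes = 300
expected_window = five_minutes * 2016

# Blocks arrived twice as fast as intended, so the target halves (difficulty doubles).
print(retarget(1 << 200, expected_window // 2, five_minutes) == 1 << 199)  # True
```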

There are no new coins created in this system; the incentive to mine comes from transaction fees.

The Angel has the hashing power of the entire Buzz network. Transactions here are the most secure, but also the most expensive. Angel itself has limited functionality and a conservative block size, since this is the only blockchain that every full node is required to process.

How are wings addressed?

Every wing has its own unique wingspace address, a little bit like IP addresses and subnets.

For example, a wing that is a direct child (tier 1) of the Angel might have been registered with the wingspace address a9e. It might have a tier 2 child wing at the wingspace address a9e:33, which might have a tier 3 child wing at a9e:33:1a, and so on. If you’re familiar with subnets, this is like the masks 255.0.0.0, 255.255.0.0 and 255.255.255.0.
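
A minimal sketch of how such addresses could be handled, assuming the colon separated format used in the example. The helper names `lineage`, `tier` and `is_descendant` are made up for illustration.

```python
from typing import List


def lineage(wingspace: str) -> List[str]:
    """Return the wing itself followed by each parent wing, nearest first."""
    parts = wingspace.split(":")
    return [":".join(parts[:n]) for n in range(len(parts), 0, -1)]


def tier(wingspace: str) -> int:
    """Tier 1 is a direct child of the Angel, tier 2 is its child, and so on."""
    return len(wingspace.split(":"))


def is_descendant(child: str, parent: str) -> bool:
    """True if `child` sits anywhere below `parent` in the tree."""
    return child.startswith(parent + ":")


print(lineage("a9e:33:1a"))               # ['a9e:33:1a', 'a9e:33', 'a9e']
print(tier("a9e:33:1a"))                  # 3
print(is_descendant("a9e:33:1a", "a9e"))  # True
```

The lineage is also the list of blockchains a node on that wing has to maintain, with the Angel added at the end.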

There may be thousands of active and widely used wings, or there may only be a handful of wings which have a huge transaction volume. The free market will decide the tradeoff between hardware requirements, hash rate and transaction fees.

There may be geographically focused wings to benefit from lower latency, and micropayment wings which become the de facto standard for day-to-day use in a particular country. Travelling may involve moving some coins to participate on the wing where local transactions take place. Such routing could be automated and seamless to the end user.

Instead of trying to second guess a one size fits all solution for blockchain scaling, Buzz takes inspiration from the approach taken by the internet, where an IP address could represent anything from a server farm to a Raspberry Pi, depending on the use requirements. The idea is to just create a solid protocol which enables the consistent transfer of coins and data across the system.

Different types of activity can take place in wings more suited to their use case. The needs of the network now may be completely different in the future, and allowing all wings to have a number of definable parameters and to be optimised for particular use cases (such as storage, or gambling) allows the network to evolve over time.

How are blocks mined?

Miners must synchronise the blockchain of the wing they are mining, and all parent wings up to and including Angel. Nodes can fully synchronise and validate as many blockchains as they like, or operate as light clients for ones they use less frequently.

Mining in Buzz creates hashes and blocks using a different method from Bitcoin’s.

The data that is included to generate a hash must also include the wingspace address and a public key.

Instead of hashing a Merkle root to bind transaction data to a block, a public key is hashed. Once a hash of sufficient difficulty to create a block has been found, the transactions data will be added, and then the block contents signed by the private key that corresponds with the public key used to generate the hash.

By signing rather than hashing blockchain specific data, we enable a single hash to be used in multiple wings (as it is not tied to a particular wing’s transactions) as long as it meets the difficulty requirement for each parent.

Since transaction data is not committed to the hash as in Bitcoin (where it is as a result of hashing the Merkle root), there needs to be a disincentive to publishing multiple versions of a block using the same hash signed multiple times. This can be achieved by allowing miners to create punishment transactions which include the conflicting signed block headers for an already redeemed hash. The miner who submits the punishment transaction gets to claim the associated fees, and the miner who published multiple versions of a block is punished by losing the reward.
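
The check behind a punishment transaction could look roughly like this. The `SignedHeader` layout is hypothetical, and signature verification is passed in as a function because the article does not fix a signature scheme; the `accept_all` stub below is a placeholder, not a real verifier.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SignedHeader:
    """Hypothetical block header: a proof of work hash plus signed contents."""
    pow_hash: bytes   # the hash that met the difficulty target
    pubkey: bytes     # public key that was committed to inside that hash
    tx_root: bytes    # commitment to the transactions added after hashing
    signature: bytes  # signature over the block contents by pubkey's private key


def proves_double_publish(a: SignedHeader, b: SignedHeader,
                          verify: Callable[[SignedHeader], bool]) -> bool:
    """True if the same miner signed two different blocks for one hash."""
    return (a.pow_hash == b.pow_hash
            and a.pubkey == b.pubkey
            and a.tx_root != b.tx_root
            and verify(a)
            and verify(b))


def accept_all(header: SignedHeader) -> bool:
    """Placeholder verifier for the example only; a real one checks the signature."""
    return True


first = SignedHeader(b"hash", b"key", b"root-1", b"sig-1")
second = SignedHeader(b"hash", b"key", b"root-2", b"sig-2")
print(proves_double_publish(first, second, accept_all))  # True
print(proves_double_publish(first, first, accept_all))   # False
```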

When generating a hash, miners must include the previous block hash and block number for all tiers of wings they are mining on. This will allow all parent wings to have a picture of the number of child wings and their hash power.

Hashing in the wingspace a9e:33:1a means that if a hash of sufficient difficulty was found, the miner could use it to create a block in the wings a9e:33:1a, a9e:33 and a9e. If the difficulty was high enough to create a block in the Angel, that wingspace will effectively ‘check in’ with Angel, providing data from which its current hash rate can be determined and giving an overview of the health of wings. If a wing has not mined a block of Angel level difficulty in x amount of time, the network might consider the wing to have ‘died’.
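
To make the mining steps concrete, here is a rough sketch of hashing a wingspace address, public key and chain tips, then checking which wings the result qualifies for. Double SHA-256, the byte layout, the function names and the targets are all assumptions for illustration, and the signing step that follows a successful hash is left out.

```python
import hashlib
from typing import Dict, List, Optional, Tuple

Tip = Tuple[bytes, int]  # (previous block hash, block number) for one tier


def pow_hash(wingspace: str, pubkey: bytes, tips: List[Tip], nonce: int) -> int:
    """Hash the public key and chain tips; no transaction data is committed."""
    h = hashlib.sha256()
    h.update(wingspace.encode())
    h.update(pubkey)
    for prev_hash, block_number in tips:
        h.update(prev_hash)
        h.update(block_number.to_bytes(8, "big"))
    h.update(nonce.to_bytes(8, "big"))
    return int.from_bytes(hashlib.sha256(h.digest()).digest(), "big")


def eligible_wings(hash_value: int, targets: Dict[str, int]) -> List[str]:
    """Wings whose target the hash meets (a lower target is a higher difficulty)."""
    return [wing for wing, target in targets.items() if hash_value <= target]


def mine(wingspace: str, pubkey: bytes, tips: List[Tip], target: int,
         max_tries: int = 1_000_000) -> Optional[int]:
    """Try nonces until the hash meets the target; return the nonce or None."""
    for nonce in range(max_tries):
        if pow_hash(wingspace, pubkey, tips, nonce) <= target:
            return nonce
    return None


# Made-up targets: deeper wings are easier, the Angel is hardest.
targets = {"a9e:33:1a": 2**250, "a9e:33": 2**245, "a9e": 2**240, "angel": 2**230}
print(eligible_wings(2**242, targets))  # ['a9e:33:1a', 'a9e:33']

tips = [(b"\x00" * 32, 7), (b"\x01" * 32, 42), (b"\x02" * 32, 99)]
nonce = mine("a9e:33:1a", b"example-pubkey", tips, targets["a9e:33:1a"])
```

A hash that also met the `angel` target would be the ‘check in’ described above.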

With 30 second blocks, the Angel would produce over 1 million blocks a year, which means even a wing with just 1 millionth (0.0001%) of the network hashing power should, on average, be able to ‘check in’ about once a year.
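
The arithmetic can be checked with a few lines. The only assumption added here is that finding Angel level hashes is treated as a Poisson process, which gives the chance of at least one check in within a year.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 60 * 60


def angel_blocks_per_year(block_seconds: float) -> float:
    """Number of Angel blocks produced in a year at the given block time."""
    return SECONDS_PER_YEAR / block_seconds


def expected_checkins_per_year(hash_share: float, block_seconds: float = 30) -> float:
    """Average number of Angel level hashes a wing with this share finds per year."""
    return hash_share * angel_blocks_per_year(block_seconds)


def chance_of_checkin(hash_share: float, block_seconds: float = 30) -> float:
    """Probability of at least one check in during a year (Poisson assumption)."""
    return 1 - math.exp(-expected_checkins_per_year(hash_share, block_seconds))


print(angel_blocks_per_year(30))         # 1051200.0
print(expected_checkins_per_year(1e-6))  # about 1.05
print(chance_of_checkin(1e-6))           # roughly 0.65
```

So a wing with one millionth of the hash power checks in about once a year on average, but in any given year it has roughly a one in three chance of missing, which matters when choosing the timeout x for declaring a wing ‘died’.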

It is likely that this check in data will enable miners to identify which wings are the most profitable to mine on, and the network will dynamically distribute hash power accordingly. There will be less need to mine as a pool, since there will be many wingspaces to mine in, which should enable even the smallest hashrate to create blocks and earn fees. Miners can mine in multiple wingspaces at the same time with a simple round robin of their hash power.

Creating and modifying wings

When a create wing transaction is included in an Angel block, the user can specify a number of characteristics for the wing, such as:

- Wingspace address

- Wing title and description

- Initial difficulty

- Block creation time

- Difficulty readjustment rules

- Block size limit

- Permitted Opcodes

- Permitted miners
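
As a sketch, the characteristics listed above could be carried in a record like the following. The field names, types and example values are hypothetical; the article does not define a transaction format.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class WingParams:
    """Parameters registered by a create wing transaction (hypothetical layout)."""
    wingspace_address: str
    title: str
    description: str
    initial_difficulty: int
    block_seconds: int
    retarget_rule: str
    block_size_limit_bytes: int
    permitted_opcodes: FrozenSet[str]
    # None means anybody may mine; otherwise only these public keys may sign blocks.
    permitted_miners: Optional[FrozenSet[str]] = None

    def may_mine(self, miner_pubkey: str) -> bool:
        """Check a block signer against the wing's miner permissions."""
        return self.permitted_miners is None or miner_pubkey in self.permitted_miners


casino = WingParams(
    wingspace_address="a9e:33",
    title="Example casino wing",
    description="Fast blocks, single operator",
    initial_difficulty=1,
    block_seconds=1,
    retarget_rule="fixed",
    block_size_limit_bytes=1_000_000,
    permitted_opcodes=frozenset({"TRANSFER"}),
    permitted_miners=frozenset({"casino-pubkey"}),
)

print(casino.may_mine("casino-pubkey"))  # True
print(casino.may_mine("someone-else"))   # False
```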

Hashing a public key and signing blocks with the corresponding private key allows us to do something else a little bit different: permissioned wings.

Most wings will be permissionless, as blocks can be mined by anybody. However, let’s say a casino or MMOG wants to create its own wing. It might want to do this to get a faster block creation time and to avoid transaction fees by processing transactions for free, since it is the only permitted miner.

By only allowing blocks signed by approved keys, permissioned wings cannot be 51% attacked, and could even mine at a difficulty so low it is hashed by a CPU while retaining complete security. Users will recognise that in transferring coins into a permissioned wing, there is a risk that withdrawal transactions will be ignored, though their coins would not be spendable by anyone else, so the operator has little incentive to do this. It is up to users to decide whether the benefits outweigh the risks.

The property of permissioned block creation could be used for new wings which are vulnerable to 51% attacks due to a low hashrate. Permitted miners could be added until the wing was thought to have matured to a point where it is more resilient, and the permission requirement could be removed.

Angel transactions can also be created to modify wing parameters, say changing to a different block creation interval at block x in the future. The key used to create a wing can be used to sign these modification transactions. Once a wing has matured, the creator can sign a transaction that waives their right to alter the wing any further, and its attributes become permanent.
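
A rough sketch of how the Angel registry might track modifications and the final waiver. Comparing key strings stands in for real signature verification, and the `WingRecord` class and its methods are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class WingRecord:
    """Angel-side registry entry for one wing (hypothetical layout)."""
    creator_key: str
    params: Dict[str, int]
    locked: bool = False
    # Scheduled changes as (activation_block, parameter_name, new_value).
    pending: List[Tuple[int, str, int]] = field(default_factory=list)

    def modify(self, signer_key: str, activation_block: int,
               name: str, value: int) -> bool:
        """Schedule a change; only the creator may do so, and only before waiving."""
        if self.locked or signer_key != self.creator_key:
            return False
        self.pending.append((activation_block, name, value))
        return True

    def waive(self, signer_key: str) -> bool:
        """Give up the right to modify the wing; this cannot be undone."""
        if self.locked or signer_key != self.creator_key:
            return False
        self.locked = True
        return True

    def params_at(self, block_number: int) -> Dict[str, int]:
        """Parameters in force at a given block, applying changes in block order."""
        current = dict(self.params)
        for activation_block, name, value in sorted(self.pending):
            if activation_block <= block_number:
                current[name] = value
        return current


record = WingRecord("creator-key", {"block_seconds": 300})
record.modify("creator-key", 1000, "block_seconds", 60)
print(record.params_at(999))   # {'block_seconds': 300}
print(record.params_at(1000))  # {'block_seconds': 60}
```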

How is this incentivised?

Transactions have fees. By creating blocks, miners claim those fees. If you mine a hash of sufficient difficulty to create a block at every level up to the Angel blockchain, you collect the fees on each level for all the blocks you created.

There exists the possibility to add a vig, say 20% of transaction fees, to be pooled and passed up to the parent wing. These fees would gradually work their way up to the Angel blockchain, and could be claimed as a reward for merge mined blocks with the main Bitcoin blockchain.
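
One way the vig could work, as a sketch only. It assumes the amount passed up is added to the parent wing's fee pot and is itself subject to the vig at that level; the article does not specify this, and the amounts are made up. Integer satoshi amounts avoid rounding surprises.

```python
from typing import List, Tuple


def pass_up_fees(fees_by_tier: List[int], vig_percent: int = 20) -> Tuple[List[int], int]:
    """Split fees between miners and the parent wing at each tier.

    fees_by_tier lists the fees collected in each wing, deepest wing first,
    not including the Angel. Returns the miner payout for each wing and the
    amount that finally reaches the Angel.
    """
    payouts = []
    carried = 0  # vig handed up from the wing below
    for fees in fees_by_tier:
        pot = fees + carried
        carried = pot * vig_percent // 100
        payouts.append(pot - carried)
    return payouts, carried


# Fees (in satoshis) on a tier 3 wing, its tier 2 parent and its tier 1 grandparent.
payouts, angel_pool = pass_up_fees([1000, 5000, 20000])
print(payouts)     # [800, 4160, 16832]
print(angel_pool)  # 4208
```

Nothing is created or destroyed: the payouts plus the Angel pool add up to the 26,000 satoshis of fees paid in.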

Other ideas

Data and coins can be passed up and down the wings using the same mechanism Ethereum is planning with its one parent shard and 80 children topology. However there is no mechanism to pass data sideways between sibling wings, as sibling wings are not aware of each other.

I wonder, however, if wings could be created to accept hashes from multiple wingspaces. For example, wing BB might also accept hashes, at say a 10 minute difficulty, from the wingspaces for AA and CC.

It would be possible to calculate the required difficulty level because all parent wings up to the Angel must be synchronised, and information about sibling wings will be included in block headers on the parent wing. This potentially creates a mechanism where wings can pass data between each other more efficiently and at lower cost, though I think there may be technical limitations with this system, in particular for transferring coins. This is because as far as the parent is concerned, coins transferred directly from BB to CC seem to have appeared out of thin air when passed back from CC to the parent, as the parent wing cannot see the activities of its children.

Thoughts on Proof of Work

Wings cannot merge mine with the main Bitcoin network unless Bitcoin hard forks so that it accepts hashes in the Buzz format.

This hard fork would enable the PoW to be shared, and offer increased security for both systems. Maintaining a separate proof of work between the systems, however, presents the opportunity to diversify the options available, such as Scrypt, SHA-256, X11 and Ethash.

If you wanted 30 second blocks on Angel with 4 proof of work methods, you could give each method its own difficulty targeting a 120 second block time.

When creating a wing, particular proofs of work could then be specified for use on that wing, such as 20% Ethash and 80% Scrypt. This would open up PoW methods to the free market too.
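
The block time arithmetic for mixed proofs of work can be sketched as follows. It assumes each method finds blocks independently, so their block rates add up; the function names are made up, and the 30 second target is just the figure used above.

```python
from fractions import Fraction
from typing import Dict


def per_method_intervals(block_seconds: int,
                         shares: Dict[str, Fraction]) -> Dict[str, Fraction]:
    """Block time each method should target so that it produces its share of blocks."""
    if sum(shares.values()) != 1:
        raise ValueError("shares must add up to 1")
    return {method: Fraction(block_seconds) / share for method, share in shares.items()}


def combined_interval(intervals: Dict[str, Fraction]) -> Fraction:
    """Overall block time when every method mines in parallel (rates add)."""
    return 1 / sum(1 / t for t in intervals.values())


# Four methods with equal shares: each targets 120 seconds for 30 second blocks overall.
equal = per_method_intervals(30, {m: Fraction(1, 4)
                                  for m in ("Scrypt", "SHA-256", "X11", "Ethash")})
print(equal["Scrypt"])           # 120
print(combined_interval(equal))  # 30

# A wing specifying 20% Ethash and 80% Scrypt.
mixed = per_method_intervals(30, {"Ethash": Fraction(1, 5), "Scrypt": Fraction(4, 5)})
print(mixed["Ethash"])           # 150
print(mixed["Scrypt"])           # 75/2
print(combined_interval(mixed))  # 30
```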

Summary

- Buzz is merge mined and distinct from the main Bitcoin blockchain

- Recognises that there are infinite potential use cases for blockchains, with varying design requirements.

- It is impossible to expect every node to process every transaction. There needs to be segmentation.

- Attempting a one size fits all approach to scaling will lead to suboptimal and restrictive designs for many use cases.

- Buzz creates a system where different segments with different properties can exist side by side and transfer coins and data, facilitating a free market where the best blockchains can thrive.

Thanks for reading through my idea. I hope the swirl of ideas buzzing around my head makes sense now that it has been converted into words. For any questions, thoughts or criticisms, please head to the comments.