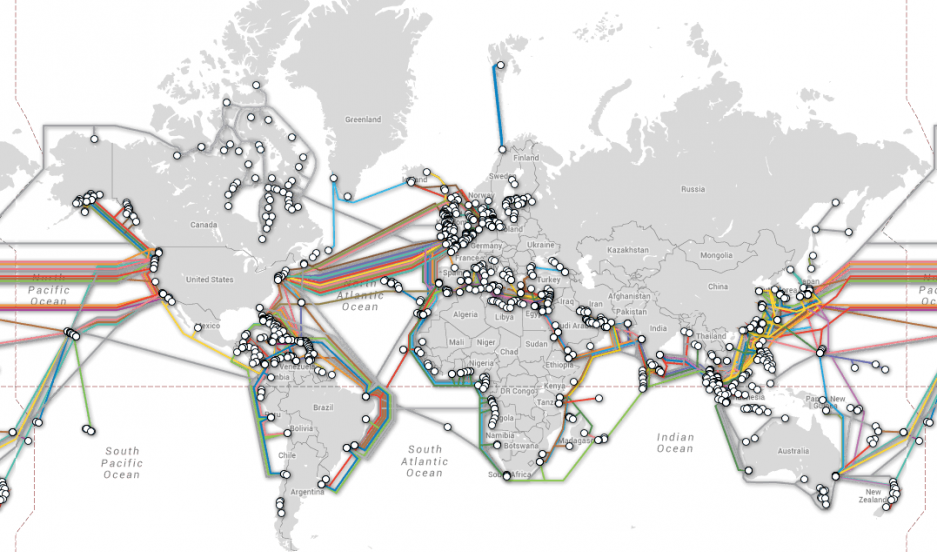

Bitcoin blocks are full. Roughly every 10 minutes, around 1MB of transactions makes its way into the block chain; the network is at capacity.

The community is divided on what happens next. Little do they know that what seems like a simple choice between competing clients could turn into a plot worthy of Hollywood, with subterfuge, subversion and excitement in abundance.

The Core team of developers are behind the software that has powered Bitcoin for the last 7 years. Their plan, Segregated Witness, is to move some of the transaction data (the signature, or ‘witness’, data) out of the main block structure to create more space inside it, and it can be introduced through a soft fork.

The Core developers also have a revolutionary idea, the Lightning Network, which they say will allow Bitcoin to scale substantially without creating huge and centralising demands on the block size.

A new team of developers, named Classic, think this will not happen quickly enough, and want to create additional capacity now by simply doubling the block size through a hard fork.

I previously explained the difference between a soft and hard fork, but for this article all you need to know is that a hard fork is generally considered more dangerous because people who don’t upgrade their software will no longer be connected to the same Bitcoin network as everyone else, which could create problems.
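To make the distinction concrete, here is a minimal sketch that models only the block-size rule (real nodes check far more than this). The function names and the 750,000-byte soft-fork limit are hypothetical, chosen purely for illustration; the 1,000,000-byte figure is Bitcoin’s original limit and the 2MB figure is Classic’s proposal. A rule change is a hard fork if upgraded nodes accept a block that old nodes reject, and a soft fork if the new rules only ever reject blocks that old nodes would still accept.

```python
from typing import Callable, Iterable

Rule = Callable[[int], bool]  # takes a block size in bytes, returns "is it valid?"

LEGACY_LIMIT = 1_000_000  # Bitcoin's original block-size limit, in bytes


def legacy_rule(block_size: int) -> bool:
    """What a node that never upgraded accepts."""
    return block_size <= LEGACY_LIMIT


def classic_rule(block_size: int) -> bool:
    """What a node running the 2MB hard fork accepts."""
    return block_size <= 2 * LEGACY_LIMIT


def classify(old: Rule, new: Rule, sizes: Iterable[int]) -> str:
    sizes = list(sizes)
    # Hard fork: upgraded nodes accept a block that old nodes reject, so the chains split.
    if any(new(s) and not old(s) for s in sizes):
        return "hard fork"
    # Soft fork: new rules only tighten, so old nodes still accept every new block.
    if any(old(s) and not new(s) for s in sizes):
        return "soft fork"
    return "no change"


if __name__ == "__main__":
    sizes = range(0, 2_500_001, 100_000)
    print("2MB limit:", classify(legacy_rule, classic_rule, sizes))
    # Hypothetical tighter limit, used only to illustrate a soft fork.
    print("750KB limit:", classify(legacy_rule, lambda s: s <= 750_000, sizes))
```

A 1.5MB block is exactly the kind of block that would split the network: `classic_rule(1_500_000)` is `True` while `legacy_rule(1_500_000)` is `False`.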

What is playing out is a battle of ideologies. One side believes in patiently planning for the future; the other thinks that short-term capacity issues are far more pressing if Bitcoin is not to lose momentum.

In reality, the Bitcoin network could probably hard fork to 2MB blocks without major incident, and it could probably operate at full capacity for a while without a major impact on growth. The doomsday scenarios painted by both sides are overblown, but since this battle will dictate the future direction of Bitcoin, its importance is not.

Realising that this battle is about power allows us to speculate more accurately on the dynamics that could play out. The first assumption to make is that, as both sides believe they are right, they will use all the tools available to them to secure their vision.

Things that may feel like a malicious attack to one side would be considered a moral necessity to protect Bitcoin by the other. All actions are likely to be well intentioned on the part of those carrying them out, so try not to take them personally; remember, all parties believe they are acting in the best interests of Bitcoin.

With that in mind, let us speculate on how the Core team may react to Classic’s attempt to hard fork Bitcoin to 2MB blocks.

For a hard fork to be successful, it needs consensus. A perfect hard fork would have 100% consensus: everyone would have updated their software before the fork activated, and nobody would be separated from the main network.

On paper, it seems miners hold the power to determine whether a hard fork has consensus. A threshold for activation is set based on the proportion of recently mined blocks that signal support for the fork, say 75%.
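The activation rule can be sketched as a rolling count over recent blocks. This is a minimal illustration, not Classic’s actual code: the class name is hypothetical, and the window of 1,000 blocks with 750 required (75%) is an assumption chosen to match the example threshold.

```python
from collections import deque


class ActivationTracker:
    """Hypothetical rolling-window tally of fork-supporting blocks (illustration only)."""

    def __init__(self, window: int = 1000, required: int = 750) -> None:
        self.window = window        # how many recent blocks are counted
        self.required = required    # supporting blocks needed, e.g. 750/1000 = 75%
        self.votes = deque(maxlen=window)  # the oldest block falls out automatically

    def add_block(self, supports_fork: bool) -> None:
        self.votes.append(supports_fork)

    def support(self) -> float:
        """Fraction of the blocks currently in the window that signal support."""
        return sum(self.votes) / len(self.votes) if self.votes else 0.0

    def activated(self) -> bool:
        # Only decide once a full window of recent blocks has been observed.
        return len(self.votes) == self.window and sum(self.votes) >= self.required


if __name__ == "__main__":
    tracker = ActivationTracker()
    # Simulate miners with 80% of the hash rate signalling: 4 of every 5 blocks.
    for height in range(1000):
        tracker.add_block(height % 5 != 0)
    print(f"support={tracker.support():.0%} activated={tracker.activated()}")
```

Counting blocks is what makes miner support easy to measure; as the next paragraphs argue, it says nothing about whether anyone else has upgraded.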

In reality, it’s not that simple. It’s easy to measure miner support in this way, but even if 99% of miners were in favour, if nobody else changed their software, those miners would just be mining amongst themselves and their newly minted coins would be worthless. Everybody else would continue using the existing network, which could be considered to have ‘won’.

Even then, it’s not that simple. If a hard fork to Bitcoin Classic were achieved with 100% miner consensus but nobody else upgraded their software, the existing network could not be considered to have ‘won’, because it depends on miners to function. It would actually be rendered completely useless, as no blocks would be created and no transactions could be confirmed.

The most likely outcome is that if 75% of miners reached consensus, most of the remaining 25% would follow suit for fear of ‘losing out’. This is the most rational action to take, and consequently 75% should actually be enough to secure a successful hard fork.


Even in the best-case scenario for Core, in which 25% of miners continued to work on their side of the fork, seemingly against their economic interests, the network would still be severely disrupted. Until the difficulty adjusted, blocks would arrive every 40 minutes instead of every 10, so processing 1MB of transactions would take 40 minutes, while the Classic network, with 75% of the hash rate, would produce a 2MB block every 13.3 minutes: six times Core’s capacity. The Core network would be rendered useless.
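The arithmetic behind these figures is easy to check. The sketch below assumes, as the paragraph does, that both sides keep the difficulty they had before the split, so block times stretch in inverse proportion to the share of the hash rate each side retains; the function names are hypothetical.

```python
TARGET_BLOCK_MINUTES = 10.0  # Bitcoin targets one block every 10 minutes on average


def block_interval(hash_share: float) -> float:
    """Expected minutes per block when only `hash_share` of the hash rate the
    current difficulty was set for is still mining (difficulty unchanged)."""
    return TARGET_BLOCK_MINUTES / hash_share


def throughput_mb_per_hour(block_size_mb: float, hash_share: float) -> float:
    """Megabytes of transactions confirmed per hour on one side of the fork."""
    return block_size_mb * 60.0 / block_interval(hash_share)


if __name__ == "__main__":
    core = throughput_mb_per_hour(block_size_mb=1.0, hash_share=0.25)
    classic = throughput_mb_per_hour(block_size_mb=2.0, hash_share=0.75)
    print(f"Core: one 1MB block every {block_interval(0.25):.1f} min, {core:.1f} MB/hour")
    print(f"Classic: one 2MB block every {block_interval(0.75):.1f} min, {classic:.1f} MB/hour")
    print(f"Classic/Core capacity ratio: {classic / core:.1f}x")
```

Core ends up at 1.5MB per hour against Classic’s 9MB per hour, a ratio of six.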

Following defeat in a hard fork, how could Core cling on to some hope and stay in the game? The answer comes in the form of a new hard fork of their own, deployed very quickly.

That hard fork could introduce any number of ideas, many of which may already have been coded behind the scenes in anticipation. The absolute minimum requirement would be lowering the difficulty so that their network could continue to function, since Bitcoin only adjusts its difficulty every 2,016 blocks, which at a quarter of the usual hash rate could take weeks.
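Why difficulty is the first problem can be seen from a simplified version of Bitcoin’s retarget rule: difficulty is only recalculated every 2,016 blocks, and each recalculation can change it by at most a factor of four. This sketch works on a plain difficulty number rather than the 256-bit target that Bitcoin Core actually manipulates, and the helper names are hypothetical.

```python
RETARGET_INTERVAL = 2016                  # blocks between difficulty adjustments
TARGET_TIMESPAN = RETARGET_INTERVAL * 10  # minutes the period is supposed to take
MAX_ADJUST = 4                            # each adjustment is clamped to a factor of 4


def next_difficulty(old_difficulty: float, actual_minutes: float) -> float:
    """Simplified Bitcoin-style retarget: scale difficulty by how fast the last
    period was mined, clamped to at most a 4x change either way."""
    clamped = min(max(actual_minutes, TARGET_TIMESPAN / MAX_ADJUST),
                  TARGET_TIMESPAN * MAX_ADJUST)
    return old_difficulty * TARGET_TIMESPAN / clamped


def days_until_retarget(blocks_remaining: int, hash_share: float) -> float:
    """Days until the next adjustment if only `hash_share` of the expected hash rate remains."""
    minutes_per_block = 10 / hash_share
    return blocks_remaining * minutes_per_block / (60 * 24)


if __name__ == "__main__":
    # Worst case: the fork lands just after an adjustment, with 25% of the hash rate left.
    print(f"{days_until_retarget(RETARGET_INTERVAL, 0.25):.0f} days to the next retarget")
    # When that period finally ends, the adjustment can only cut difficulty by 4x.
    print("new relative difficulty:", next_difficulty(1.0, actual_minutes=TARGET_TIMESPAN * 4))
```

With 25% of the hash rate left, the worst case is 56 days before the first adjustment. With only 10% left, even that capped 4x cut would leave blocks arriving every 25 minutes, which is why hard-coding a lower difficulty in an emergency fork would be tempting.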

The next priority might be to keep their network as closely synchronised with Classic’s as possible – the further the competing sides of the fork drift apart, the less likely it is anyone would ever switch away from the more dominant one.

To help stay synchronised, it would probably make sense for Core to increase the block size to 2MB. This sounds counterproductive, since this is exactly what they have been trying to avoid, but it would be essential for keeping their network as closely synchronised as possible and for preventing transactions that wouldn’t fit into a 1MB block from disappearing from their side of the fork.

This would be life support: Core would be limping along next to Classic, running a separate but similar network.

As long as Core still has some skin in the game, Classic will see it as a threat. Miners on the Classic side of the fork could conspire to attack the Core network, and with Core’s difficulty lowered to match its much smaller hash rate, they would need to redirect only a fraction of their hardware to out-mine it.

This would not be a malicious attack or a reprisal for splitting the network; it would simply be an act of self-preservation by the miners to protect their investment, and should be considered the most logical and inevitable action for them to take.

With that in mind, another change Core could introduce in a hard fork would be to alter the Proof of Work (PoW). One small change to one line of code would render useless every bit of specialised mining hardware the network currently uses (ASICs built to compute Bitcoin’s double SHA-256 hash) and help mitigate the risk of attack from the Classic network. Far from a nuclear option, it would be a logical act of self-defence that could also gain support from the altcoin community, whose hardware could be useful under a different PoW.
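To see why the change could be as small as one line, here is a toy proof-of-work check in which the hash function is a parameter. Bitcoin’s real check double-SHA-256 hashes an 80-byte header and compares the result, read as a little-endian integer, against the target; the header layout here is simplified, the helper names are hypothetical, and SHA3-256 is an arbitrary stand-in, since the text does not say which alternative PoW was proposed.

```python
import hashlib
from typing import Callable

HashFn = Callable[[bytes], bytes]


def double_sha256(data: bytes) -> bytes:
    """Bitcoin's proof-of-work hash: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def sha3_alternative(data: bytes) -> bytes:
    """Hypothetical replacement PoW hash, chosen only for illustration."""
    return hashlib.sha3_256(data).digest()


def meets_target(header: bytes, target: int, pow_hash: HashFn = double_sha256) -> bool:
    # Read the 32-byte hash as a little-endian integer and require it to be at
    # or below the target; a lower target means a higher difficulty.
    return int.from_bytes(pow_hash(header), "little") <= target


def mine(header_prefix: bytes, target: int, pow_hash: HashFn = double_sha256) -> int:
    """Brute-force a 4-byte nonce (toy version; real headers have a fixed 80-byte layout)."""
    for nonce in range(2**32):
        if meets_target(header_prefix + nonce.to_bytes(4, "little"), target, pow_hash):
            return nonce
    raise RuntimeError("no nonce found")


if __name__ == "__main__":
    easy_target = 2**248  # very low difficulty so the demo finishes instantly
    header = b"toy block header"
    print("SHA-256d nonce:", mine(header, easy_target))
    # The "one line" change: pass a different hash function.
    print("SHA3-256 nonce:", mine(header, easy_target, pow_hash=sha3_alternative))
```

Hardware built to compute SHA-256d quickly offers no help once `pow_hash=sha3_alternative` is passed, which is the defensive effect the paragraph describes.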

The idea of changing the PoW isn’t just hypothetical: it has already been coded, ironically by a Core developer, as a suggestion for the Classic source code. This was more likely an early act of subterfuge than a serious proposal, a way for Core to flex their muscles and show that there are options open to them and that they could be willing to put up a fight.

So what would happen next? With updated software, the Core developers would have a Phoenix network, resurrected from the ashes. This new network would be viewed by the community as decentralisation-driven and backed by a group of talented developers with a proven track record. It is possible many would find such a network an attractive proposition, and it could potentially compete with Classic.


This would not be the end of the world; divergence is not uncommon in new technologies. Betamax vs VHS, Blu-ray vs HD DVD, SD cards vs many others: history shows us that one format will triumph. Core vs Classic could become a noteworthy addition to the list, but ultimately one will win Bitcoin’s format war while the other ends up in the bargain bin with the rest of the altcoins.

What I have speculated about would require the alignment of a number of factors to be viable. Crucially, it would require sufficient appetite from the Core developers to put up such a potentially demanding and drawn-out fight. While some may be keen for the challenge, without overwhelming developer consensus, the prospect of success is severely diminished.

More powerful at this stage is simply the idea that they’d be prepared to put up a fight. Any miner who says they support Classic only needs a quiet word in their ear from someone in the Core team, suggesting Core might create a fork that would make the millions they have invested in hardware worthless, and they just may be tempted to change their mind. Whether Classic can gain consensus, and whether Core would pursue this path, remain to be seen, but if they did, it would make fascinating viewing.